David A. Kenny

Twitter (X) Polls

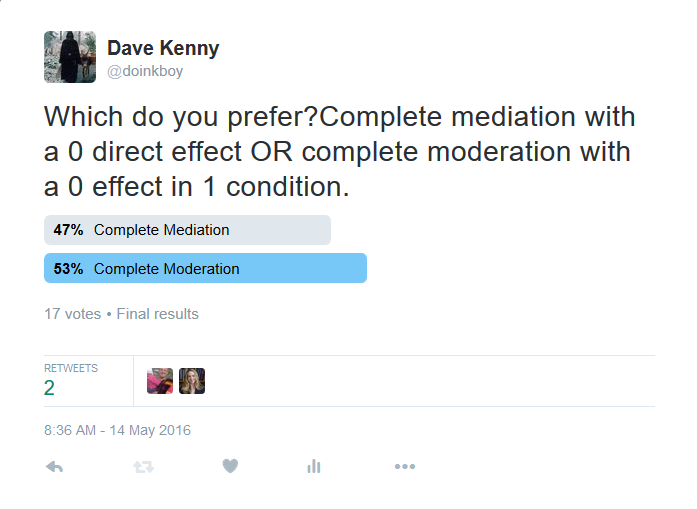

Poll #1: Mediation vs. Moderation

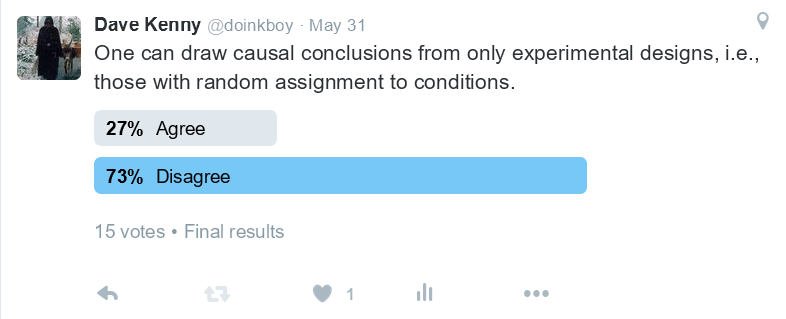

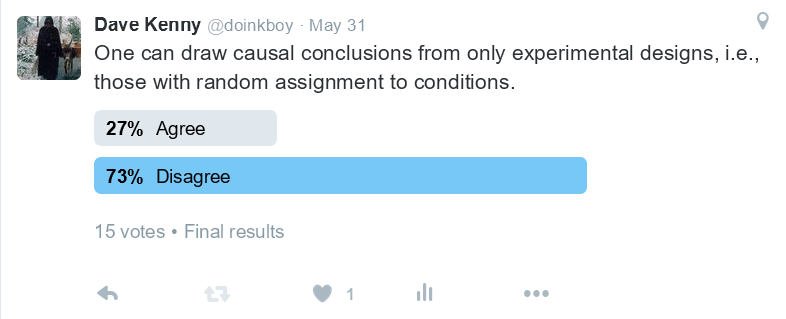

Poll #2: Randomization and Causal Conclusions

June 6, 2016

I plan to post here the results and commentary from occasional Twitter polls that I will be conducting.

I had expected most people to agree with this statement. Many a time, I have heard a speaker say, in describing a very plausible model, "I cannot make any causal statements because I have not conducted a randomized experiment." I think that "Disagree" winning reflects the non-random selection of those reading the poll.

My vote would be "Disagree." Certainly, experiments are the optimal way to establish causality, but non-experimental studies have their place. First, if we were to limit causal statements to experiments, then we would be unable to study as causal variables those that we are unable or, because of ethical limitations, unwilling to manipulate. In the field of close relationships, for example, we could not study the effects of bullying, divorce, and death of a spouse on social relationships. Second, while experiments have strong internal validity, they are by no means perfect. For instance, experiments often turn into non-experiments when there are missing data. Third, causal inference for non-experiments can be improved by longitudinal designs, controls for measurement error, and inclusion of covariates to control for confounders. Following the logic of Pearl's Causality book and my Correlation and Causality book, causal inference with non-experimental data is indeed possible.

The first poll below finished on May 21st and the question and the results are as follows:

More details: All assumptions are met and the conclusion is valid. Complete moderation means that the intervention effect is found in one condition and that there is no effect in the other condition.

The official winner is "complete moderation" by a nose, but there were two votes for "complete mediation" on my Facebook page, and if they were included in the total, then "complete mediation" would win by a nose.

My preference is "complete moderation." I am focusing on understanding the intervention. If a researcher finds a manipulated moderator that can "turn off" the effectiveness of the intervention, then the researcher has a much better understanding of how the intervention works. Presumably, if the researcher has some understanding of what can "turn off" the effect of the intervention, the researcher might also be able to figure out a way to "ramp up" that effect. Complete moderation provides key information about the "active ingredient" of the intervention. If, instead, the researcher is focused on the outcome, then finding complete mediation provides a way to find new interventions that have a stronger effect on the outcome.

Perhaps if you are an experimentalist (which I guess I am) you voted for complete moderation, but if you are a prevention scientist or "outcome oriented" you voted for complete mediation.

This is just my take on the issue. You almost certainly have your own. Send me an email or post a comment on Twitter, and I might well post your response.

As an aside, if there is a nearly even split in preference between moderation and mediation, why are there so many more papers testing mediation than moderation? One part of the answer is the greater power of tests of mediation. Consider an effect with a d of .4 and 150 participants. Complete moderation would have a d for the interaction of .2, with a power of .23. Complete mediation with equal standardized a and b paths would lead to both equalling .44 and a power for the indirect effect of virtually 1.00.
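The figures in this power comparison can be roughly reproduced with a short calculation. The sketch below is not necessarily how the original numbers were obtained; it rests on several assumptions of mine: a two-sided test at alpha = .05, two equal groups, a normal approximation for the interaction test, the conversion r = d / sqrt(d² + 4) from d to a correlation, a Fisher-z approximation for each path, and a joint-significance rule (both a and b significant, treated as independent) for the indirect effect. The function names are hypothetical.

```python
from math import atanh, sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def power_two_group(d, n_total, alpha=0.05):
    """Approximate power of a two-sided test of a standardized mean
    difference d, assuming two equal groups of n_total / 2 each."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    ncp = d * sqrt(n_total / 4)  # noncentrality under the normal approximation
    return (1 - Z.cdf(z_crit - ncp)) + Z.cdf(-z_crit - ncp)


def power_correlation(r, n_total, alpha=0.05):
    """Approximate power of a two-sided test of a correlation r,
    using the Fisher-z transformation."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    ncp = atanh(r) * sqrt(n_total - 3)
    return (1 - Z.cdf(z_crit - ncp)) + Z.cdf(-z_crit - ncp)


def d_to_r(d):
    """Convert d to a correlation, assuming equal group sizes."""
    return d / sqrt(d ** 2 + 4)


d, n = 0.4, 150

# Complete moderation: the text gives an interaction d of half the overall d.
power_moderation = power_two_group(d / 2, n)

# Complete mediation with equal standardized paths: a * b = r, so a = b = sqrt(r).
path = sqrt(d_to_r(d))

# Joint-significance approximation: both paths must be significant.
power_mediation = power_correlation(path, n) ** 2

print(f"power for the interaction (d = {d / 2:.1f}): {power_moderation:.2f}")
print(f"standardized a = b: {path:.2f}")
print(f"approximate power for the indirect effect: {power_mediation:.4f}")
```

Under these approximations, the interaction power comes out near .23, the paths near .44, and the power for the indirect effect very close to 1, in line with the figures above. An exact calculation (noncentral t, or a simulation of the indirect-effect test) would differ slightly in the later decimals.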

Special thanks to Alan R. Johnson and Jeremy J. Taylor who helped me re-write the question.

May 30, 2016